Performance planning: testing roadmaps and frameworks

Opinion

In the latest of this digital media planning series, we’re going to examine testing.

Welcome back! In this instalment of Performance Planning, we’re talking testing. Remember the hypothesis, methodology and conclusion layout from your school science classes?

Testing is key to improving performance. However, in an ever-changing landscape (available technology, measurement approaches, consumer sentiment, public finances; the list goes on), robust insight can be difficult to achieve. (“Well, our click-through rate did increase, but come to think of it, we also released our new product that week…”).

It can also feel as though you are constantly testing but never actually learning anything, and staff turnover and remote working make it even harder to build a cumulative view of the insights you’ve painstakingly gathered.

This is where roadmaps and frameworks come in. I know, I know, not the most exciting prospect, but I can almost promise that you’ll feel better about your testing efforts with these two functions in place.

For the avoidance of doubt, in this article roadmaps and frameworks are defined as follows:

- Roadmap: A future-facing timeline depicting upcoming tests and relevant events. Looks a bit like a calendar.

- Framework: A table of the test details, used during test planning for due diligence and, once the test has finished, to document the findings and conclusion.

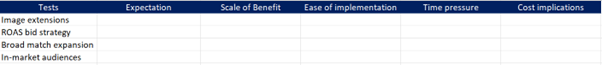

This wouldn’t be the series it is without showing you how to create each of these, so let’s start with the roadmap. The first thing you’ll need to do is gather a list of all of the tests you want to run. Pop them in an Excel sheet and add the following column headers:

Against each feature you want to test, note what you expect the test to show and why you want to run it. Your table will then look a bit like this:

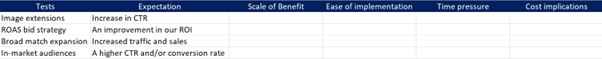

Now we’re going to colour-code the rest of the columns so that we can prioritise our tests. The column headers work like this:

Scale of Benefit: How broadly or deeply would a positive outcome be felt across the campaign or wider business? Will it ultimately impact your bottom line, or will the effects be limited to engagement metrics for a single campaign? If a successful test would impact your overall performance or your business as a whole, make the cell green. If the impact would be minimal and the test is simply for a marginal gain, make the cell red. If you’re not sure, if the scale of benefit depends heavily on the test results, or if it’s somewhere in between, make the cell orange.

Ease of implementation: Some tests are tricky because of the setup they require. For example, if you want to test custom landing pages and your web development resource is limited, the test will be more complex to orchestrate. Applying in-market audiences, on the other hand, can be done in minutes within the Google Ads interface. In this column, red means ‘this is going to be a bit painful to get in place’, green is for super easy ‘I can do that from my own laptop in 5 minutes’ tests, and orange is for anything that will take a bit of co-ordination but shouldn’t be too much trouble.

Time pressure: Is there a reason this test needs to run sooner than others? For example, a test of promotional messaging must take place whilst that promotion is live, so this cell would be green. Red is used for tests without any time pressure, and orange for ‘there’s no specific time pressure, but we’d really like to do it sooner rather than later’.

Cost implications: If the test doesn’t incur incremental costs, this cell should be green (for example, image extensions require no additional spend, so they have no cost implications). For tests that require additional budget sign-off, this cell should be red. For tests that could incur incremental costs within your existing budget (e.g. broad match expansion, which could increase your unit costs), make this cell orange.

Once you’ve colour-coded your table of tests, it will look something like this:


This creates a kind of ‘testing heatmap’, which makes it easy to prioritise your tests objectively. For example, whilst a return on ad spend (ROAS) bid strategy test has no time pressure, all its other columns are green, meaning the test would be broadly beneficial, easy to implement, and ‘free’ in terms of marketing budget.

Although the broad match expansion has a time pressure (we need to increase sales volume), its scale of benefit is questionable, the expansion would take some time to implement, and there would be cost implications because broad match keywords are often pricey. This may therefore be something we choose to test during a ‘lull’.

Based on the above colour-coding, our testing priorities are as follows:

1: ROAS bid strategy

2: In-Market Audiences

3: Image Extensions

4: Broad Match Expansion
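If you’d rather turn the heatmap into a number, one simple approach is to score each colour and sum across the columns. The Python below is a minimal sketch, not the author’s method: it assumes green = 2, orange = 1 and red = 0 with equal weighting, and the broad match ‘scale of benefit’ and ‘ease of implementation’ colours are assumptions based on the description above. In-Market Audiences and Image Extensions would be added in the same way once their colours are filled in.

```python
from dataclasses import dataclass

# Assumed numeric mapping for the heatmap colours (not prescribed by the article).
SCORES = {"green": 2, "orange": 1, "red": 0}
COLUMNS = ("scale_of_benefit", "ease_of_implementation", "time_pressure", "cost_implications")


@dataclass
class PlannedTest:
    name: str
    ratings: dict  # column name -> "green" / "orange" / "red"

    def score(self) -> int:
        # Equal-weight sum of the colour scores across the four heatmap columns.
        return sum(SCORES[self.ratings[col]] for col in COLUMNS)


def prioritise(tests):
    # Highest score first; sorted() is stable, so ties keep their input order.
    return sorted(tests, key=lambda t: t.score(), reverse=True)


backlog = [
    PlannedTest("Broad Match Expansion", {
        "scale_of_benefit": "orange",        # assumption: benefit is "questionable"
        "ease_of_implementation": "orange",  # assumption: takes some time to set up
        "time_pressure": "green",            # sales volume needs to increase
        "cost_implications": "orange",       # may raise unit costs within budget
    }),
    PlannedTest("ROAS bid strategy", {
        "scale_of_benefit": "green",
        "ease_of_implementation": "green",
        "time_pressure": "red",
        "cost_implications": "green",
    }),
]

for rank, test in enumerate(prioritise(backlog), start=1):
    print(f"{rank}: {test.name} (score {test.score()})")
```

With these ratings the ROAS test scores 6 and broad match scores 5. An equal-weight sum won’t always match your judgement, which is a cue to revisit the colour-coding or add columns.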

If, on reflection, this prioritisation doesn’t feel right, review your colour-coding and add columns where necessary to capture additional context. For example, a ‘Stakeholder Buy-In’ column could highlight tests that are more urgent because others are enthusiastic about them or, conversely, tests that may be trickier because key parties haven’t bought in. If you want a ‘proper’ roadmap, these tests can then be scheduled alongside your other web, product and marketing activities like this:

This makes it easy to check whether your tests clash with anything that could skew the results (e.g. website downtime, or a market-leading offer that will artificially enhance performance beyond the effect of whatever you’re actually testing).
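Once the roadmap lives in a spreadsheet, the clash check can be scripted. A minimal sketch in Python, assuming each test and each other activity has an inclusive start and end date; the activity names and dates are hypothetical.

```python
from datetime import date


def overlaps(start_a: date, end_a: date, start_b: date, end_b: date) -> bool:
    # Two inclusive date ranges overlap if each one starts on or before the other ends.
    return start_a <= end_b and start_b <= end_a


def find_clashes(test_window, activities):
    """Return the names of roadmap activities that overlap a test's date window."""
    start, end = test_window
    return [name for name, (a_start, a_end) in activities.items()
            if overlaps(start, end, a_start, a_end)]


# Hypothetical roadmap entries.
activities = {
    "Website downtime": (date(2024, 3, 4), date(2024, 3, 5)),
    "Spring sale offer": (date(2024, 3, 18), date(2024, 3, 31)),
}

broad_match_test = (date(2024, 3, 6), date(2024, 3, 20))
print(find_clashes(broad_match_test, activities))
```

Here the downtime ends before the test starts, so only the sale offer is flagged as a clash.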

And that leads us very neatly on to testing frameworks. Unlike the roadmap, which gives an overall view of your testing efforts, the testing framework template should be completed for each individual test you run, then updated with the final results once the test has concluded. If you’ll allow the colloquialism, frameworks are used to ‘check yourself before you wreck yourself’. Here’s the template I like to use (if you are working ‘in-house’, you’ll want to replace the ‘Client Contact’ row with ‘Senior Stakeholder’):

This framework serves multiple purposes:

1: To ensure your test is hygienic – this means we can trust the outputs because the test occurred under ‘clean conditions’, for example ensuring the only variable is the feature that you are testing. If you were to test a new audience and increase your budget on the same day, it could prove impossible to identify which of these adjustments impacted performance.

2: To state KPIs and success metrics upfront, so that outcomes aren’t open to subjective interpretation. By defining the success and failure parameters before the test starts, you’ve already committed to an action based on the outcome, so next steps are clear from the off.

3: To document test details for future reference. At some point in the future, you’ll be pulling data across your test period and you will wonder why the data ‘goes weird’. Completed frameworks will begin to form a rich tapestry of your testing history, providing context around performance fluctuations, and whether a given approach should be reappraised, insisted upon, or vetoed.
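The second purpose, committing to success and failure parameters upfront, can be made concrete by storing each framework as a structured record with a decision rule. This is a hypothetical sketch rather than the author’s template: the field names, the 10% uplift threshold and the ROAS figures are illustrative assumptions, and it assumes a higher KPI value is better.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FrameworkRecord:
    # Hypothetical fields; adapt to whichever rows your own template uses.
    name: str
    hypothesis: str
    variable_under_test: str
    kpi: str
    baseline: float
    min_uplift: float  # agreed before the test starts, e.g. 0.10 = +10%
    action_if_success: str
    action_if_failure: str
    result: Optional[float] = None

    def conclusion(self) -> str:
        # The decision rule is fixed upfront, so the outcome maps straight to an action.
        if self.result is None:
            return "Test still running"
        uplift = (self.result - self.baseline) / self.baseline  # assumes higher is better
        return self.action_if_success if uplift >= self.min_uplift else self.action_if_failure


roas_test = FrameworkRecord(
    name="ROAS bid strategy",
    hypothesis="A ROAS-based bid strategy will improve return on ad spend",
    variable_under_test="Bid strategy",
    kpi="ROAS",
    baseline=4.0,
    min_uplift=0.10,
    action_if_success="Roll out to all campaigns",
    action_if_failure="Revert to previous bid strategy",
)
roas_test.result = 4.6  # a 15% uplift on the baseline
print(roas_test.conclusion())
```

Keeping completed records like this in one place is one way to build the cumulative testing history described above.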

If you are struggling to fill in the testing framework above for a given test, it may be worth reviewing the testing conditions to make sure you’ve considered any circumstances that could affect the results.

Next time we’ll be discussing 70 / 20 / 10 planning, and how to structure your media investment with ‘test and learn’ at the core. Until then, happy testing!

Niki Grant is search director at The Kite Factory. Check out her previous instalments of Performance Planning, a guide for marketers and media planners to handle performance media planning and budget optimisation and her other columns for The Media Leader.

